Imagine running your test suite and watching the same tests pass one minute and fail the next.

These unreliable test results make you wonder if your code is working correctly.

You spend hours tracking down false failures, wasting valuable time and slowing down development.

Fortunately, you can identify and fix the causes of flaky tests.

This guide explains what causes flakiness and how to avoid it so you get consistent test results.

Let's get started.

What Are Flaky Tests?

In software testing, flaky tests are tests that produce inconsistent results even when no changes have been made to the code or test environment.

Unlike reliable tests, which produce the same result on every run, a flaky test may pass without trouble one day and fail the next for no apparent reason.

This makes debugging a nightmare. Instead of focusing on building new features or fixing real issues, you end up spending hours troubleshooting why a previously passing test suddenly failed.

Why Is It Important to Detect Flaky Tests?

Because their results are inconsistent, flaky tests create several challenges for developers and QA testers.

Here are some reasons why flaky test detection is important:

- Makes the Testing Process Unreliable: When a test result does not reflect the actual state of your code, you start doubting whether a failure is a real bug or just flakiness, and testers begin to question the validity of every test outcome.

- Delays Continuous Integration and Deployment (CI/CD): In CI/CD environments, automated tests run frequently to monitor build stability. A single flaky test can fail a build, causing unnecessary stress and delaying releases to production.

- Compromises Software Quality: When developers face flaky tests repeatedly, they might start dismissing test failures as flukes. Real bugs can then get dismissed along with the flaky tests, lowering software quality.

- Wastes Time and Resources: Flaky tests make maintenance difficult because finding the root cause requires manual verification and multiple reruns. This delays development and wastes time, resources, and budget.

To avoid these problems, you need to understand what causes flaky tests and how to prevent them.

What Are the Causes of Flaky Tests?

Here are some common reasons your tests might be flaky:

- Poor Test Design: If tests are not fully isolated from each other, or rely on state left behind by a previous test, they may produce different results when run on their own or in a different order.

- Timing and Synchronization: An operation under test may take longer than the timeout or fixed wait the test allows, due to network latency, server load, or an unstable application state. When that happens, the test fails even though the code is correct.

- Unstable Test Environment: An unstable test environment can cause flakiness through variations in system resources (CPU, memory), server versions, operating systems, configurations, network speed and performance, and so on.

- Concurrency Issues: When multiple tests run in parallel, which is common in CI/CD pipelines to speed up testing, they might access the same resources at the same time. This can lead to interference and race conditions, where a test's outcome depends on the timing and order in which the tests execute.

- Test Dependencies: Tests that depend on external systems, such as third-party APIs, databases, or microservices, can become flaky when those systems are slow, unavailable, or return changing data.

- Non-Deterministic Code: Code that can produce different output for the same input, for example because it depends on random values or the current time, can cause flakiness. Similarly, a test might pass or fail depending on whether its test data changes during execution due to external data sources.

- Inaccurate Statements: When developers make incorrect assumptions in sync and wait statements, the test may not check the outcome they expect, which can produce false positives or false negatives.
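To make the first cause concrete, here is a minimal Python sketch of an order-dependent test. The names (`shared_cart`, `check_add_item`, `check_cart_starts_empty`, `run_in_order`) are hypothetical, and `run_in_order` is a tiny stand-in for a test runner, not a real framework API. Because both checks share one module-level list, the same code passes or fails depending only on execution order.

```python
# Hypothetical example: two checks share one module-level list instead of
# creating their own state, so the second check's result depends on run order.
shared_cart = []  # shared mutable state: the root cause of the flakiness


def check_add_item():
    shared_cart.append("book")
    assert len(shared_cart) == 1


def check_cart_starts_empty():
    assert shared_cart == []


def run_in_order(checks):
    """Run the checks in the given order and record 'pass' or 'fail' for each."""
    shared_cart.clear()  # state is reset once per run, not before every check
    results = {}
    for check in checks:
        try:
            check()
            results[check.__name__] = "pass"
        except AssertionError:
            results[check.__name__] = "fail"
    return results


if __name__ == "__main__":
    print(run_in_order([check_cart_starts_empty, check_add_item]))
    # {'check_cart_starts_empty': 'pass', 'check_add_item': 'pass'}
    print(run_in_order([check_add_item, check_cart_starts_empty]))
    # {'check_add_item': 'pass', 'check_cart_starts_empty': 'fail'}
```

Giving each check its own fresh cart, or resetting the cart before every check, removes the order dependency.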

How to Identify Flaky Tests?

Identifying flaky tests can be challenging because they are unpredictable. But with the right approach, you can detect and fix them before they cause too much trouble.

Here's how to do this:

- Analyze Test Patterns: Review test results and logs to determine when and how often a test fails. Recognizing these patterns can help you narrow down the cause of the flakiness.

- Rerun Failed Tests: Rerun a failing test several times. If it passes on some runs and fails on others without any changes to the code or environment, this is a strong indicator of flakiness.

- Run Tests in Isolation: Run the test on its own to see whether it produces consistent results. If it passes alone but fails when run with other tests, the likely cause is a dependency on test order or on state shared with other tests.

- Compare Test Results in Different Environments: Run tests on different environments and compare the results to identify flaky tests. If a test fails only in a specific environment, the flakiness might be due to environmental differences.

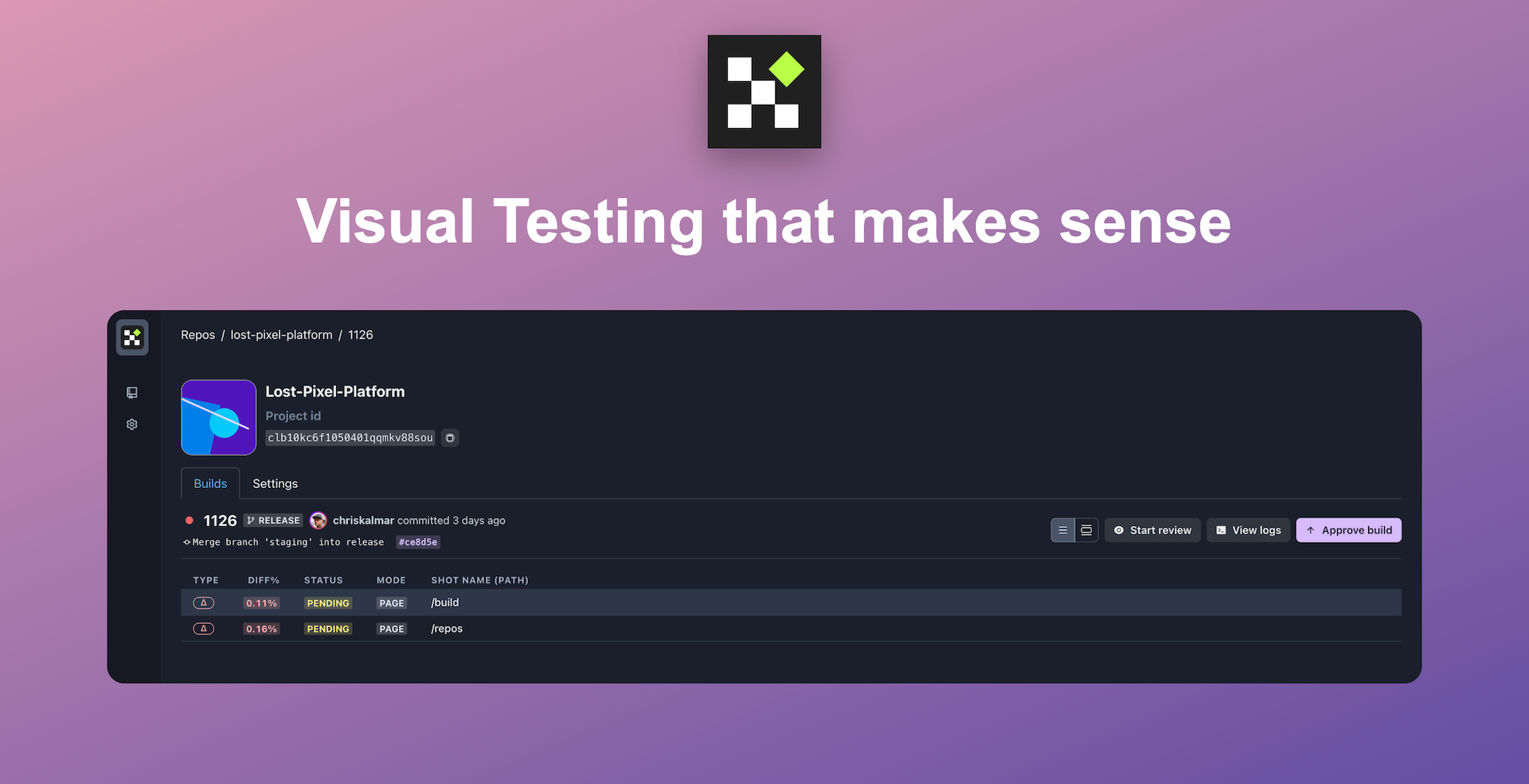

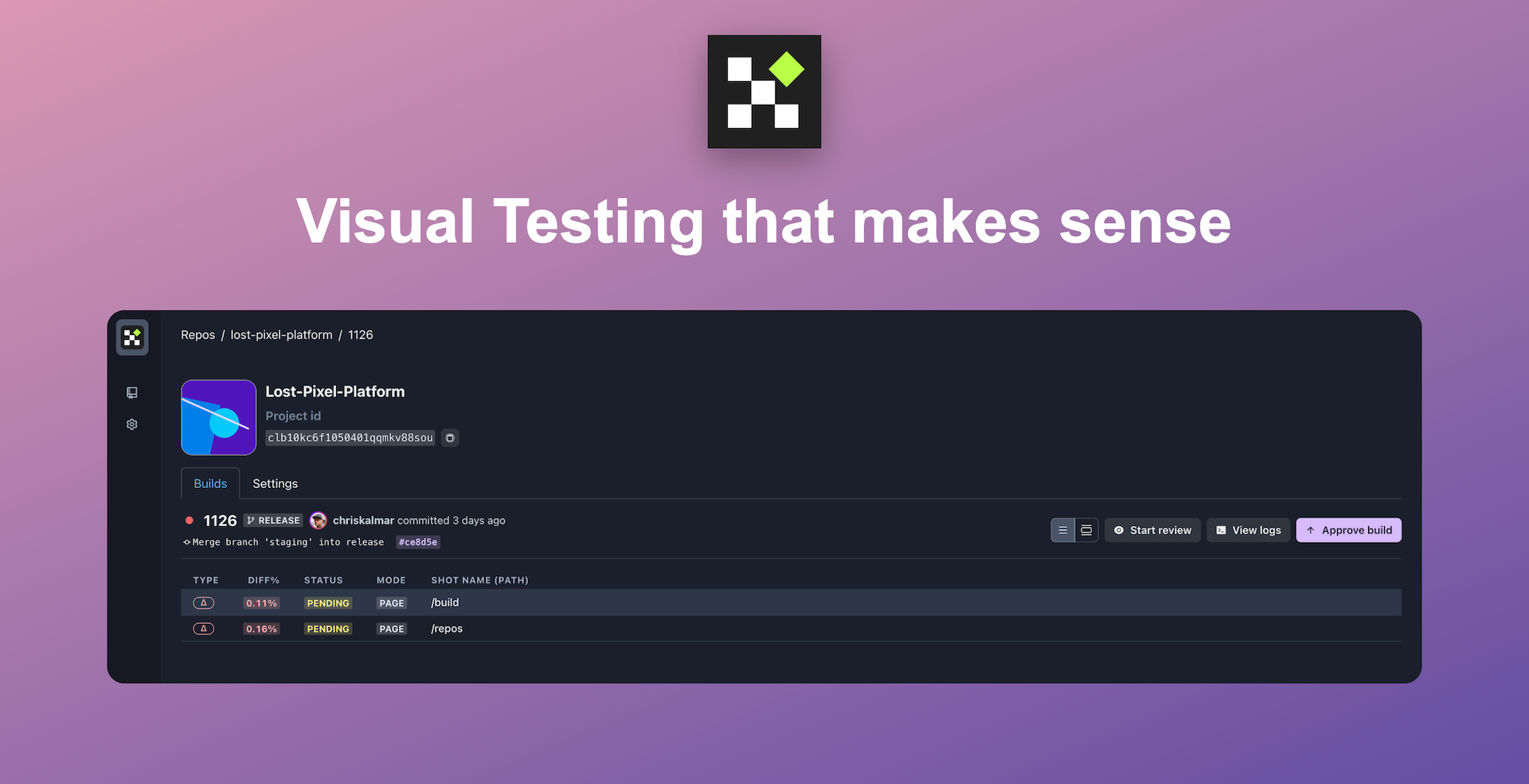

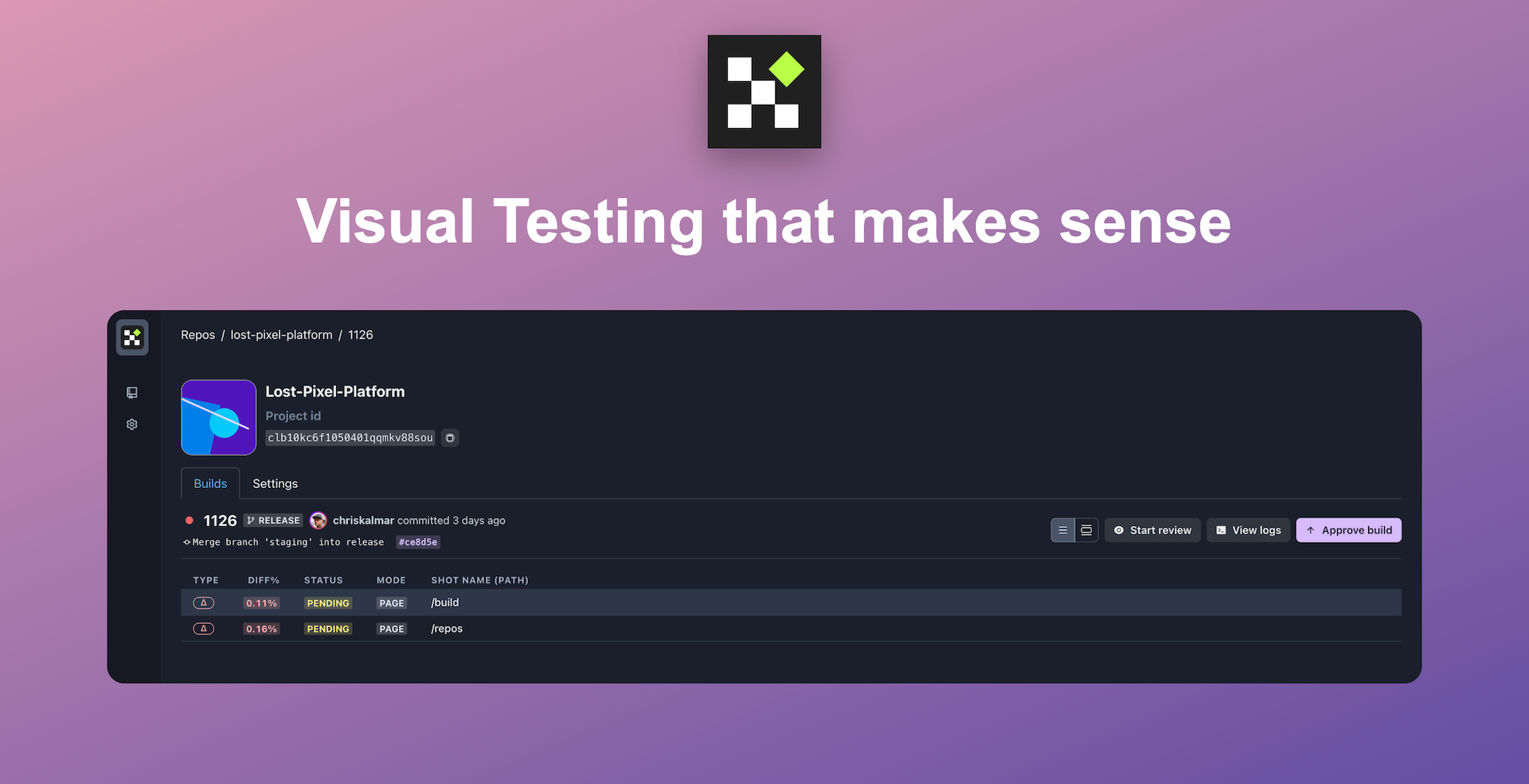

- Use Automation Tools: Tools like Lost Pixel can automatically rerun failed tests to check for flakiness.
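The rerun approach can be sketched in a few lines of Python. This is an illustrative helper, not a feature of any specific tool: `classify_test` and `make_intermittent_test` are hypothetical names, and a test is assumed to signal failure by raising `AssertionError`.

```python
from typing import Callable


def classify_test(test: Callable[[], None], runs: int = 10) -> str:
    """Rerun `test` several times and return 'pass', 'fail', or 'flaky'.

    A test counts as flaky when it both passes and fails across runs with no
    code changes in between. Only AssertionError is treated as a test failure;
    any other exception propagates so real errors are not hidden.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    outcomes = set()
    for _ in range(runs):
        try:
            test()
            outcomes.add("pass")
        except AssertionError:
            outcomes.add("fail")
    if len(outcomes) == 2:
        return "flaky"
    return outcomes.pop()


def make_intermittent_test(fail_every: int) -> Callable[[], None]:
    """Build a hypothetical test that fails on every `fail_every`-th call."""
    calls = 0

    def intermittent_test() -> None:
        nonlocal calls
        calls += 1
        assert calls % fail_every != 0, f"failed on call {calls}"

    return intermittent_test


if __name__ == "__main__":
    print(classify_test(make_intermittent_test(3), runs=10))  # flaky
    print(classify_test(lambda: None, runs=5))  # pass
```

More runs give more confidence: a test that fails once in fifty runs can easily look stable over ten.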

Set up visual regression tests in minutes with Lost Pixel Platform. Do not let your users find bugs first.

How to Fix Flaky Tests?

Flaky tests can be a major problem in maintaining reliable software, but there are ways to fix them.

To identify the root causes, you need a deep understanding of the application under test (AUT).

Here are some strategies you can use to fix flaky tests:

Stabilize the Test Environment

Remove fluctuations in system resources, network, and hardware configurations to set up a clean and stable test environment.

This will provide a controlled environment to increase test reliability and reduce the risk of flakiness.

Here's how you can do this:

- Create a consistent environment that is as close to production conditions as possible.

- Use mocks or fakes to simulate the behavior of external systems or APIs. This makes your tests faster and more reliable since they are not waiting on or affected by the real systems.

- Before each test, make sure the state is clean, for example by resetting the database or clearing the cache, so every test has a reliable starting point.
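Here is a minimal Python sketch of both ideas. It assumes a hypothetical `convert_price` function that depends on an external exchange-rate client with a `get_rate` method. `unittest.mock.Mock` stands in for the real API. Each test opens its own in-memory SQLite database through the hypothetical `fresh_database` helper, so no state leaks between tests.

```python
import sqlite3
from unittest import mock


def convert_price(amount: float, rates_client) -> float:
    """Code under test: converts USD to EUR using an external rates service."""
    rate = rates_client.get_rate("USD", "EUR")  # hypothetical client method
    return round(amount * rate, 2)


def fresh_database() -> sqlite3.Connection:
    """Create a brand-new in-memory database so each test starts from a clean state."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
    return conn


def test_convert_price_uses_fixed_rate():
    # The mock replaces the real rates API, so the result never depends on
    # the network or on the live exchange rate.
    fake_client = mock.Mock()
    fake_client.get_rate.return_value = 0.5
    assert convert_price(10.0, fake_client) == 5.0
    fake_client.get_rate.assert_called_once_with("USD", "EUR")


def test_orders_table_starts_empty():
    conn = fresh_database()  # nothing left over from earlier tests
    try:
        count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
        assert count == 0
    finally:
        conn.close()
```

In a framework such as pytest or `unittest`, the `fresh_database` call would typically move into a fixture or `setUp` method so every test gets it automatically.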

Adjust Sync and Wait Times

By adjusting wait times, you can address timing issues that cause flakiness.

Instead of relying on exact timing, base your waits on specific conditions.

For example, if a test is waiting for an action to be completed, like a server response, it might fail if that response is delayed.

By synchronizing test steps with the system's state, a test waits until the desired condition is met (up to a maximum timeout) before checking whether the operation was successful.
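A common way to wait for a condition rather than a fixed duration is a polling helper. The sketch below is a generic Python version. `wait_until` is a hypothetical name, and browser tools such as Selenium ship their own explicit-wait utilities (for example `WebDriverWait`) that you would normally use instead.

```python
import threading
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],
    timeout: float = 5.0,
    interval: float = 0.1,
) -> None:
    """Poll `condition` until it returns True, or raise after `timeout` seconds.

    Unlike a fixed sleep, this returns as soon as the system is ready and only
    fails if the condition is still false once the whole timeout has elapsed.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


if __name__ == "__main__":
    # Simulate a server response that arrives after a delay.
    response_received = threading.Event()
    threading.Timer(0.3, response_received.set).start()

    wait_until(response_received.is_set, timeout=2.0, interval=0.05)
    print("response received; safe to assert on it now")
```

The timeout should be generous enough for slow CI machines. Because the helper returns as soon as the condition holds, a large timeout only costs time when something is actually wrong.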


Prepare Consistent Test Data

Unpredictable or random elements in your test data can lead to flaky test outcomes.

To make your tests more reliable:

- Avoid using data that can change between test runs, such as dynamic user inputs, time, or dates.

- Use fixed, predefined data to produce the same results every time the test runs.

- If your tests involve random elements, such as generated random data, use a fixed seed so the same "random" values are produced on every run.
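Here is a Python sketch of these points. It seeds a private random generator so the "random" data is identical on every run, and it passes the current time into the code under test instead of reading the system clock. `make_test_users`, `is_expired`, and `FIXED_NOW` are hypothetical names used for illustration.

```python
import random
from datetime import datetime, timezone


def make_test_users(count: int, seed: int = 1234) -> list:
    """Generate 'random' test users reproducibly from a seeded, private RNG."""
    rng = random.Random(seed)  # same seed -> same sequence on every run
    return [{"name": f"user{i}", "age": rng.randint(18, 90)} for i in range(count)]


def is_expired(expires_at: datetime, now: datetime) -> bool:
    """Take the current time as a parameter instead of calling datetime.now()."""
    return now >= expires_at


# A fixed, predefined "current time" for tests, instead of the real clock.
FIXED_NOW = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)

if __name__ == "__main__":
    assert make_test_users(5) == make_test_users(5)  # identical on every run
    assert is_expired(datetime(2024, 1, 1, tzinfo=timezone.utc), now=FIXED_NOW)
    assert not is_expired(datetime(2024, 2, 1, tzinfo=timezone.utc), now=FIXED_NOW)
```

Using a private `random.Random` instance, rather than seeding the global `random` module, keeps other code from consuming values and shifting the sequence.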

Manage Concurrency Issues

Start by identifying the shared resources that cause conflicts between tests running in parallel.

Then use these techniques to remove the conflicts:

- Isolate the tests so they are fully independent and do not depend on any external factors or state set up by other tests.

- Implement locking mechanisms to control access to shared resources. This ensures that only one test can access a resource at any given time.

- Lock critical sections to prevent race conditions. This is particularly useful in multithreaded environments that execute multiple parts of the code at the same time.

- Use containers (like Docker) to manage concurrent tests in large test suites. This way, you can run each test in its own sandboxed environment to avoid interference.
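Here is a Python sketch of the locking idea. It assumes a hypothetical `SharedCounter` that several parallel workers update. The `threading.Lock` turns the read-modify-write in `increment` into a critical section, so concurrent updates cannot overwrite each other.

```python
import threading


class SharedCounter:
    """Hypothetical shared resource, e.g. a counter that parallel tests update."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # Reading and then writing `value` is a read-modify-write sequence.
        # Holding the lock makes it a critical section: only one thread at a
        # time can be between the read and the write.
        with self._lock:
            current = self.value
            self.value = current + 1


def run_parallel_workers(workers: int = 8, increments: int = 1000) -> int:
    """Start several threads that all update the same counter, then return its value."""
    counter = SharedCounter()

    def worker() -> None:
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(run_parallel_workers(8, 1000))  # 8000
```

Locks serialize access, which slows tests down. When possible, giving each test its own copy of the resource (as with the containers above) avoids the need for a lock entirely.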

Tools to Identify And Fix Flaky Tests

Several tools are available to help find, fix, and manage flaky tests.

Here are some tools to monitor test behavior and implement solutions.

- Jenkins (for automatic test runs as part of the CI pipeline).

- TestNG (to identify and rerun flaky tests).

- Kubernetes (for test environment management).

- Lost Pixel (a visual regression testing tool for handling flaky tests).

Best Practices to Avoid Flaky Tests

Combine the above strategies with best practices to help prevent flaky tests and maintain software quality.

- Make sure the test steps are clear, concise, and accurate.

- Focus each test on a single functionality to avoid complications.

- Use descriptive names to easily identify, explain, and manage test cases.

- Write modular, reusable test code that is easy to debug.

- Run your tests frequently during development to catch flaky tests early.

- Integrate automated testing tools into your CI/CD pipeline for automatic flaky test retries.

- Use logs and test reports to monitor and address flaky tests before they become a larger issue.

- Regularly refactor tests to improve performance and maintainability.

- Document flaky test cases and solutions to prevent similar issues in the future.
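Automatic retries are safest when they also report what they retried, so flakiness gets logged instead of silently hidden. The decorator below is a hypothetical Python sketch: `retry_and_report` and `flaky_report` are illustrative names, and failures are assumed to raise `AssertionError`. In a real suite, you would usually rely on the retry support built into your test framework or CI tool.

```python
import functools
import logging

logger = logging.getLogger("flaky-tests")
flaky_report = []  # names of tests that passed only after a retry


def retry_and_report(max_attempts: int = 3):
    """Retry a failing test, but record it as flaky instead of hiding the failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")

    def decorator(test):
        @functools.wraps(test)
        def wrapper(*args, **kwargs):
            for attempt in range(1, max_attempts + 1):
                try:
                    result = test(*args, **kwargs)
                except AssertionError:
                    if attempt == max_attempts:
                        raise  # failed every attempt: treat it as a real failure
                    continue
                if attempt > 1:
                    flaky_report.append(test.__name__)
                    logger.warning("%s passed on attempt %d; likely flaky",
                                   test.__name__, attempt)
                return result

        return wrapper

    return decorator


if __name__ == "__main__":
    calls = {"count": 0}

    @retry_and_report(max_attempts=3)
    def test_sometimes_fails():
        calls["count"] += 1
        assert calls["count"] >= 2  # fails on the first attempt only

    test_sometimes_fails()
    print(flaky_report)  # ['test_sometimes_fails']
```

Reviewing `flaky_report` (or the warning logs) after each CI run turns retries into a to-do list of tests to fix, rather than a way to ignore them.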

Final Words

Flaky tests can be a major headache and disrupt your workflow.

By understanding their causes and implementing proper strategies and best practices, you can improve the test suite's quality and reduce the impact of flaky tests.


FAQs

What is the difference between a flaky test and a brittle test?

A flaky test fails unpredictably even when the code stays the same, whereas a brittle test fails consistently whenever there is a minor change in the code or environment.

How do you reduce flaky tests in Selenium?

To reduce flaky tests in Selenium, use proper synchronization and wait times, avoid relying on fixed sleep times, and run parallel tests on multiple machines.

About Dima Ivashchuk

Hey, I'm Dima, the co-founder of Lost Pixel. I like modern frontends, building stuff on the internet, and educating others. I am committed to building the best open-source visual regression testing platform!